Humans have long been a curious and exploratory species. New knowledge has enabled people to create new things and improve on existing ones. Many scientific endeavours have been made possible by incrementally improving on an invention, putting each emerging piece of know-how to good use. It almost feels like human beings have stumbled and experimented their way to inventions and technological progress. We worry about impact, safety, ethics and regulations long after the inventions have become mainstream. Can we afford not to discuss ethics in AI right upfront?

Ecosystem for cars

Consider the story of the invention of the car. The first model to be produced in multiple copies was built by Karl Benz in 1885. Henry Ford began mass-producing cars around 1908. The first car did not have windshields, doors, turn signals or even a round steering wheel. In fact, it probably looked like anything but the car of today.

Features such as speedometers, safety belts, windshields and rear-view mirrors came slowly. However, the first societal rules for driving a car came much later. The turn signal was perfected in 1939. The first version of a speed breaker appeared in 1906, yet it was Arthur Holly Compton, a Nobel Prize winner (for Physics, though), who studied and improved the speed breaker in 1953. It took the ‘Vienna Convention on Road Traffic’ in 1968 to frame the first traffic rules for driving cars. In spite of this, many countries did not sign up to the convention and continued as before.

The first multi-country rules governing the driving of a car emerged a full 60-80 years after cars had been introduced and had become mainstream! Not that accidents hadn’t happened in the interim. The first accident was reported as early as 1891, in Ohio, and the first death due to an accident followed in 1896. Many, many accidents were reported in the period. Yet we humans were slow to respond with safety regulations and guidelines.

Ecosystems for the Internet

Our response as a society to cars is not a one-off occurrence. Think of how we stumbled and explored our way into the internet. We imagined that the internet would allow everyone to have a voice. We were naïve to think it would not have any downsides, and we did not build protocols to guide and leverage it. Part of the problem is that no one, including the makers of the internet and the tech world, knew their work would lead to such dramatic effects. We did not fully comprehend what the internet was capable of doing. As the tech world reels from a hailstorm of crises around privacy, misinformation and monopoly, we are left wondering about the choices we made with the internet decades ago.

Today we find ourselves at the same crossroads with Artificial Intelligence as we did with the internet 50 years ago. We do not fully grasp what AI is capable of. There is much to learn about AI and how our futures will evolve with it. That it will be a strategic advantage is a given. That it needs to evolve is also a given. Given all of this, framing universal laws even as the technology evolves requires collective will and compromise. And at the rate at which the technology is changing, framing a common code is a bit like changing the engines whilst flying the plane! Guess what, we are just about buckling up.

Why is Ethics in AI important?

We are at the cusp of a major technical revolution with Artificial Intelligence. We paid with lakhs of lives for the delay in coming together to frame commonly accepted rules for driving. With AI, we may not have the luxury of making such a payment, however costly! With the internet, we continue to survive and to question our choices. We may not have that opportunity if we allow AI to become mainstream without the guardrails firmly in place.

Global interest in framing guidelines for AI

The European Union has taken the first step in framing principles which will govern AI. The guidelines and principles framed by the EU are a compass: they are guardrails rather than a destination. Hence they will neither prevent nor delay developments in the field of Artificial Intelligence. However, the principles of ‘Ethics in AI’ will ensure that AI systems have the larger good of humankind built in.

Claiming first-mover advantage in becoming a world governing body on AI seems to be on many agendas. Another global body, the OECD (Organisation for Economic Co-operation and Development), has endorsed the EU guidelines and also released similar guidelines of its own. In addition, the WEF (World Economic Forum) has formed an AI council and wants to be the world governing body on AI. More on that in a separate post.

EU and ‘Ethics in AI’ guidelines

The European Union has suggested a non-exhaustive framework to help apply the principles of ensuring ‘Ethics in AI’. It is requesting firms and experts to share inputs on implementing the framework. Conversation about AI is an important element that the EU is trying to encourage.

The guiding principles for creating trustworthy Artificial Intelligence and ensuring ‘ethics in AI’ as laid down by the European Union are:

1. The Principle of respect for human autonomy

Humans must not be unjustifiably coerced, deceived, manipulated or even herded. AI should be designed to augment and complement human cognitive, social and cultural skills. It must aim for the creation of meaningful work.

2. The Principle of prevention of harm

AI must not cause or exacerbate harm to human beings, whether to their dignity or to their mental or physical well-being. The system should be robust enough to guard against malicious use as well.

3. The Principle of fairness

The principle of fairness means that Artificial Intelligence systems should be designed to leave no room for discrimination and unfair biases. AI must foster equal opportunities in terms of access to education, goods, services and technology. Additionally, humans must have choices as well as redressal mechanisms against AI.

4. The Principle of explicability

The principle simply means that AI must be transparent. The capabilities and purposes of AI systems must be openly communicated. Here is a quote from the guidelines report: “Decisions by AI, as much as possible must be explained to those directly and indirectly affected. If it is too difficult to explain the algorithm then the makers of AI should ensure traceability, auditability and transparent communication on system capabilities, thereby protecting fundamental rights of humans”.

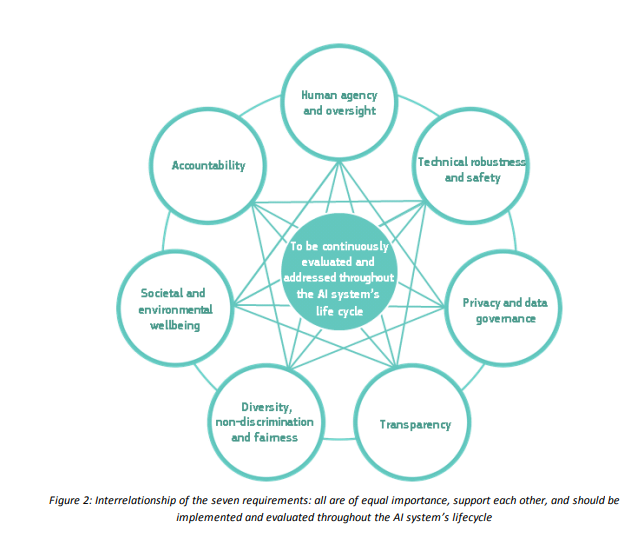

Please find the non-exhaustive list of requirements from the EU AI and Ethics document, which will make AI trustworthy, here.

Conversations about AI

Predictions and conversations about AI tend to lean towards the extremes. One extreme view predicts that AI will be the end of humanity (Stephen Hawking, Elon Musk). The other view is that AI will be the best invention, one which will bring untold prosperity to humans (Ray Kurzweil, Marvin Minsky). Without subscribing to these extreme views, every user of AI must engage in constructive conversations on it. Conversations will build collective understanding around ethics in AI, thereby helping evolve a better point of view.

You are already a user of Artificial Intelligence. If you are on social media, your feed is curated by AI algorithms. Your reading lists, music recommendations on Spotify, and what to watch on Netflix or Amazon Prime are all determined by AI algorithms. If you applied for a job or a loan, chances are your application or resume was screened by an AI system before a human got to see it.

Having established that you are a user of AI, here are a few ways to begin conversations about AI systems and engage with these systems:

- Artificial Intelligence is all around us and is perhaps even modifying our behaviour. We are currently prioritising the convenience these AI systems bring to us, without thinking through AI’s impact. Let us ‘be present’ to this nudging by the AI systems and look to step beyond mere convenience. Stepping away from curated feeds, looking for new sources of information, and exploring new areas and POVs are all great places to start.

- How do these AI algorithms work? How do they know what we like and dislike? What logic does the loan-approving algorithm use to decide which loan application to approve? These are all good questions to ask. They will help us begin conversations on Artificial Intelligence, and may even uncover whether the AI has failed to consider an important element. Is the algorithm biased? Unethical, even? When AI is viewed through this lens, it becomes a conversation that every single one of us can contribute to, rather than code that only a handful of AI programmers can build.

- Explore the ‘possibilities of AI’ in conversations. Reflect on and discuss the repetitive or predictable tasks in your role or other roles in your organization. Even if no AI system has yet been built for the role, seek discussions on possibilities. Any predictable or repetitive task will be swallowed head first by AI systems in due course. Hence it will be useful to explore how the role can be enhanced and made even more humane. Stay curious without being fearful of AI.

These are plausible first steps. Do share ways that you think humans can and should engage with AI, creating opportunities for growth and meaningful work for all.